Algorithmia is an MLOps tool that provides a simpler, faster way to deploy machine learning models into production. Algorithmia specializes in "algorithms as a service": users write code snippets that run the ML model, host them on Algorithmia, and then call that code as an API.

Prepare the model. This step involves training and validating the ML model on appropriate datasets. After that, you optimize and fine-tune the model for performance and accuracy. Finally, you save the trained model in a format compatible with the deployment environment.
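The preparation step (train, validate, fine-tune, save) can be sketched end to end with only Python's standard library. This is a minimal illustration under stated assumptions, not Algorithmia's workflow: the toy dataset (y = 3x + 2 plus noise), the one-feature ridge regression, the candidate regularization strengths, and the file name `model.json` are all hypothetical choices made for the example. "Fine-tuning" is shown here as picking the ridge strength with the lowest error on held-out validation data, and the model is saved as plain JSON so a serving environment can load it without extra dependencies.

```python
import json
import random
import tempfile
from pathlib import Path


def make_dataset(n, seed=0):
    """Hypothetical toy data: y = 3x + 2 plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [3 * x + 2 + rng.gauss(0, 0.5) for x in xs]
    return xs, ys


def train(xs, ys, lam=0.0):
    """Fit y = w*x + b with a ridge penalty lam on w (intercept not penalized)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / (sxx + lam)
    b = mean_y - w * mean_x
    return {"w": w, "b": b, "lam": lam}


def mean_squared_error(model, xs, ys):
    """Validation metric: average squared prediction error."""
    preds = (model["w"] * x + model["b"] for x in xs)
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)


def tune(train_xs, train_ys, val_xs, val_ys, lams=(0.0, 1.0, 10.0, 100.0)):
    """Fine-tuning step: keep the ridge strength with the lowest validation MSE."""
    best_model, best_mse = None, float("inf")
    for lam in lams:
        model = train(train_xs, train_ys, lam)
        mse = mean_squared_error(model, val_xs, val_ys)
        if mse < best_mse:
            best_model, best_mse = model, mse
    return best_model, best_mse


def save_model(model, path):
    """Plain JSON keeps the artifact loadable by any runtime that parses JSON."""
    Path(path).write_text(json.dumps(model))


def load_model(path):
    return json.loads(Path(path).read_text())


if __name__ == "__main__":
    xs, ys = make_dataset(200)
    split = int(0.8 * len(xs))  # hold out the last 20% for validation
    model, val_mse = tune(xs[:split], ys[:split], xs[split:], ys[split:])
    path = Path(tempfile.gettempdir()) / "model.json"  # hypothetical location
    save_model(model, path)
    print(f"saved {model} (validation MSE {val_mse:.3f}) to {path}")
```

A real project would swap in its own dataset, model family, and serialization format; the point is only that validation data, not training data, drives the tuning choice, and that the saved artifact matches what the deployment side expects to load.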

HandsOnGuide To Machine Learning Model Deployment Using Flask

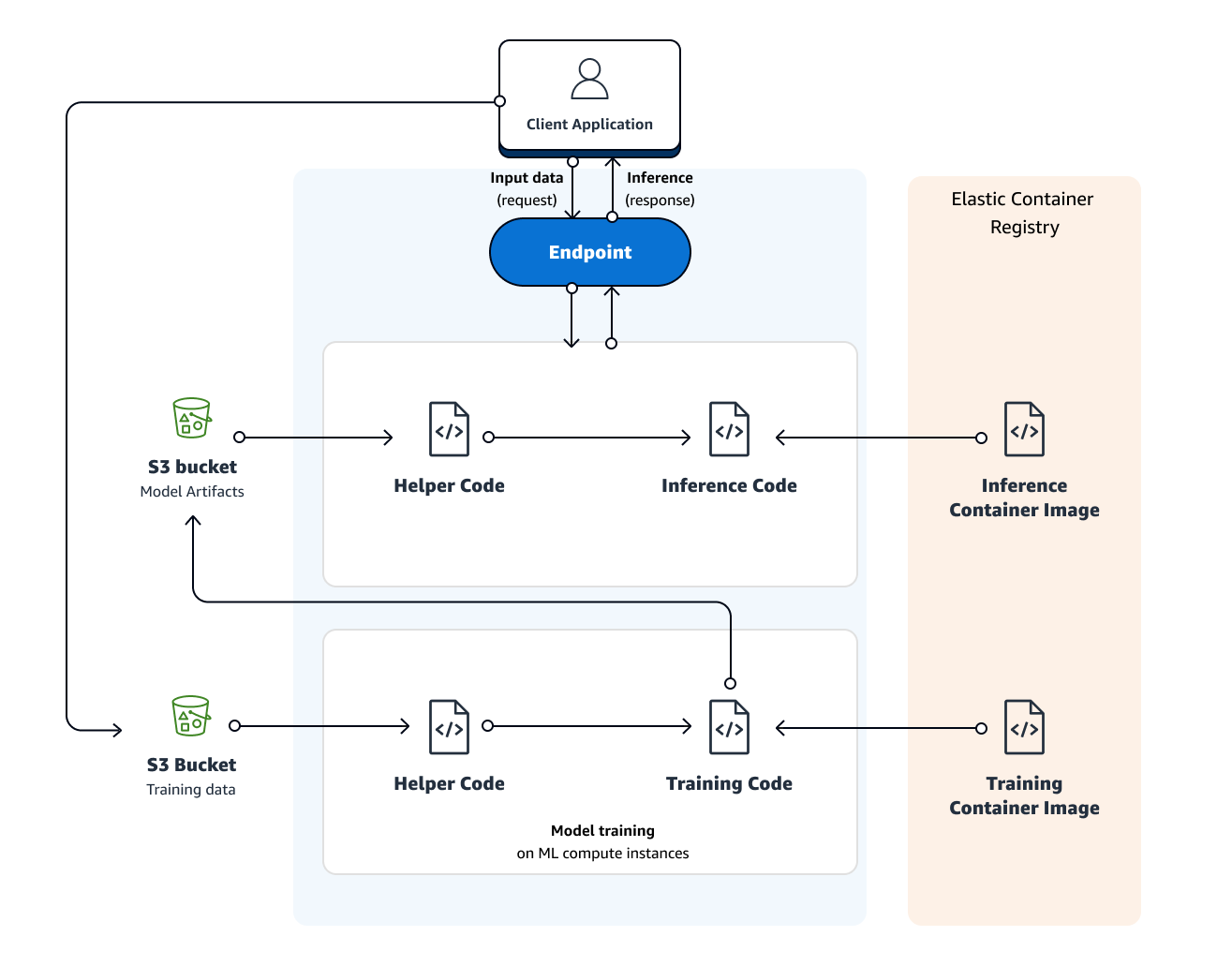

Practical guide to deploy ML models in AWS SageMaker CellStrat

Deploy multiple machine learning models for inference on AWS Lambda and Amazon EFS AWS Machine

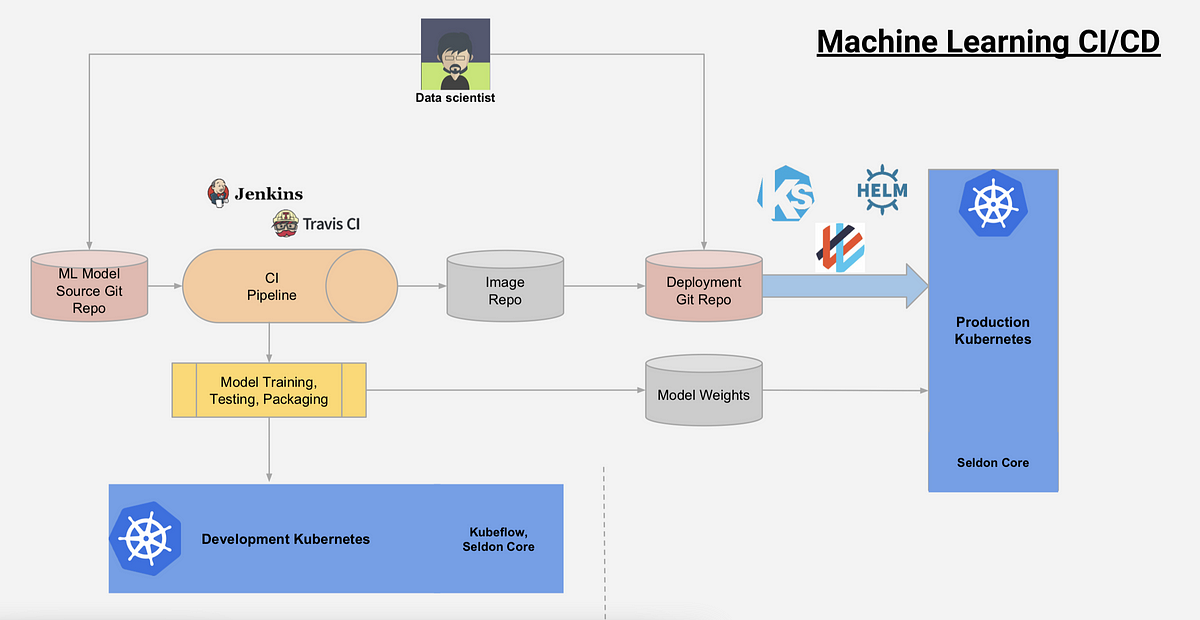

Simple steps to create scalable processes to deploy ML models as microservices Microsoft Open

How To Deploy Machine Learning Models Using FastAPIDeployment Of ML Models As API’s YouTube

Deploying deep learning models Part 1 an overview by Isaac Godfried Towards Data Science

Machine Learning Model Deployment Explained All About ML Model Deployment YouTube

Session 3 Ways to deploy your ML model YouTube

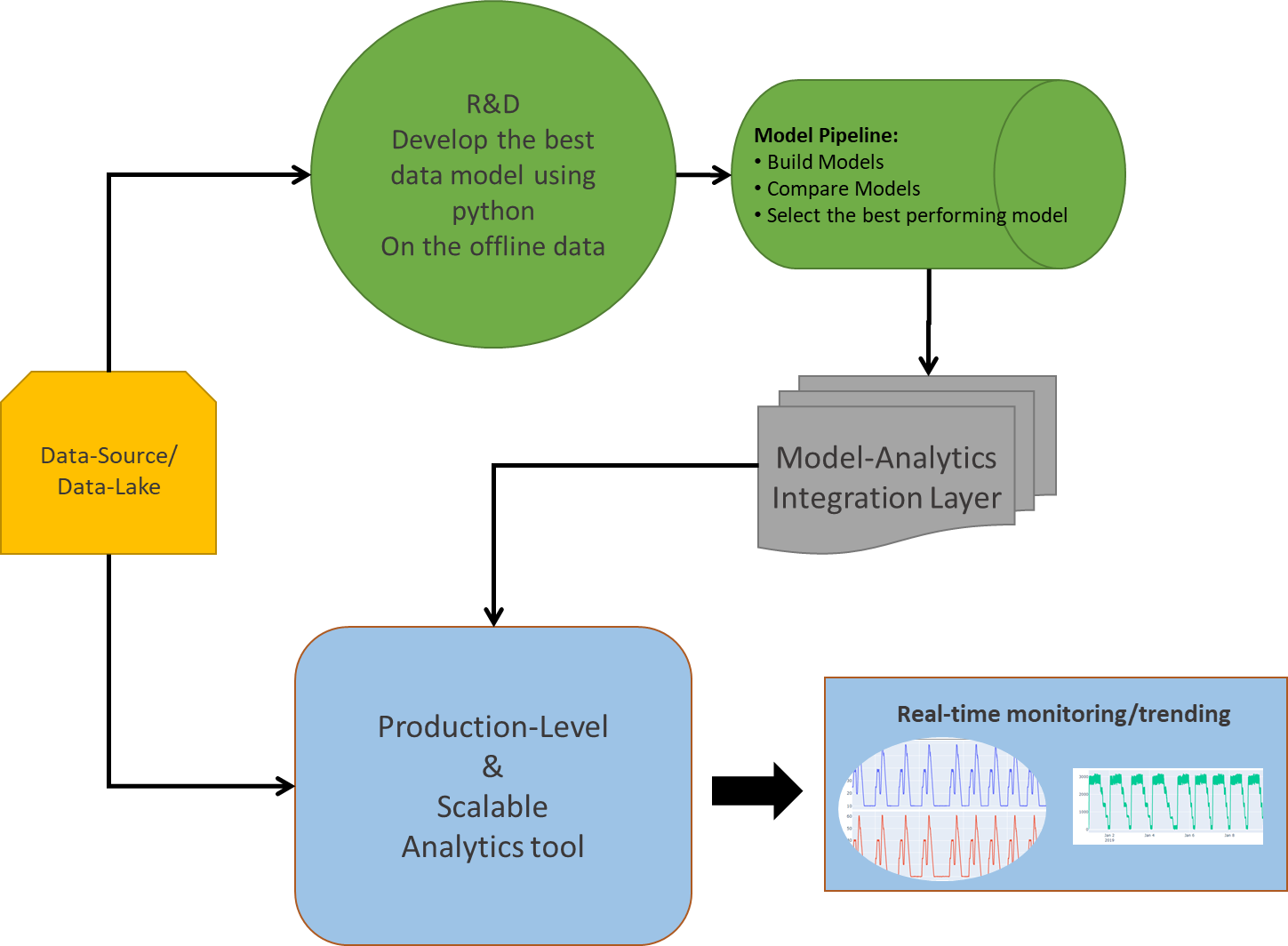

Devising your deployment strategyML Model Manufacturing Data Analytics

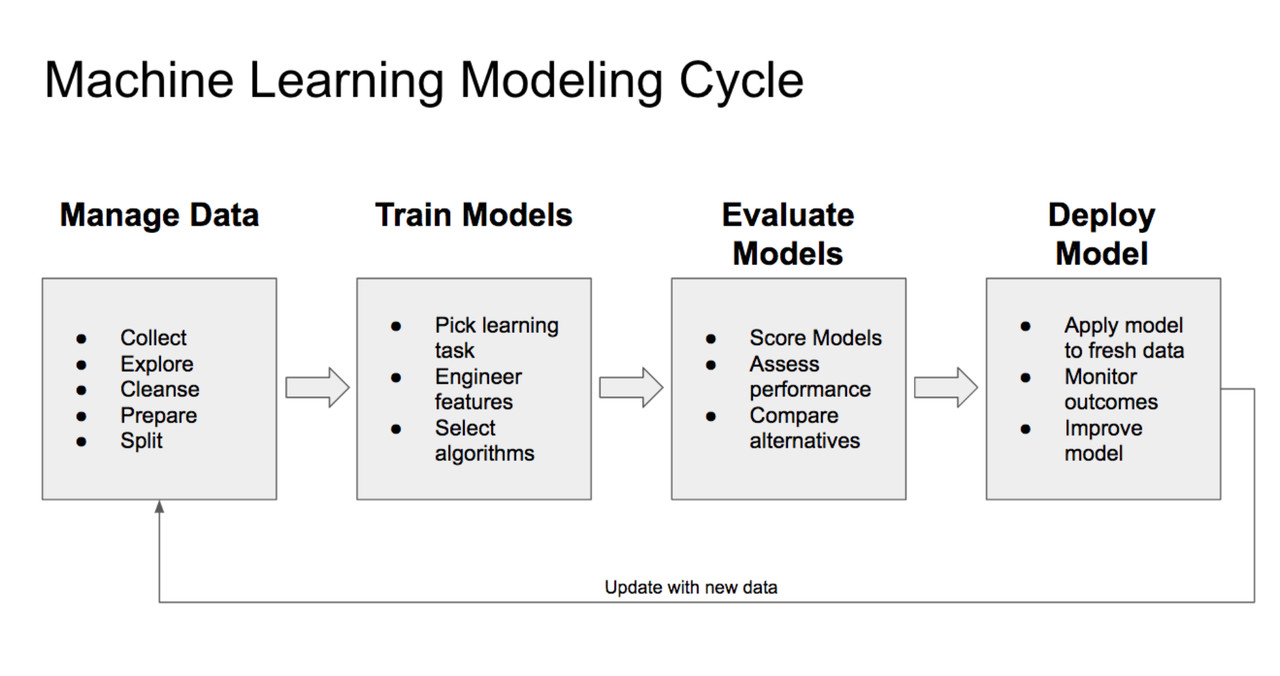

4 Stages of the Machine Learning (ML) Modeling Cycle

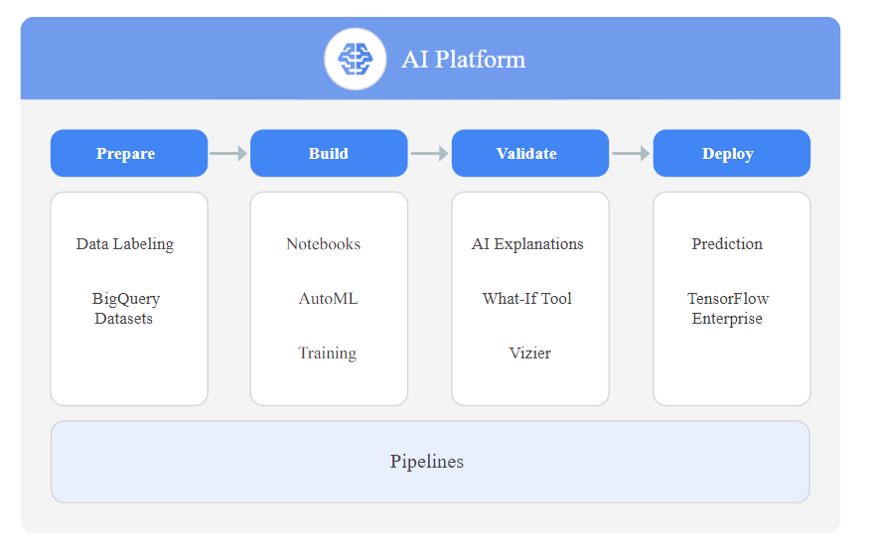

Best practices for implementing machine learning on Google Cloud Cloud Architecture Center

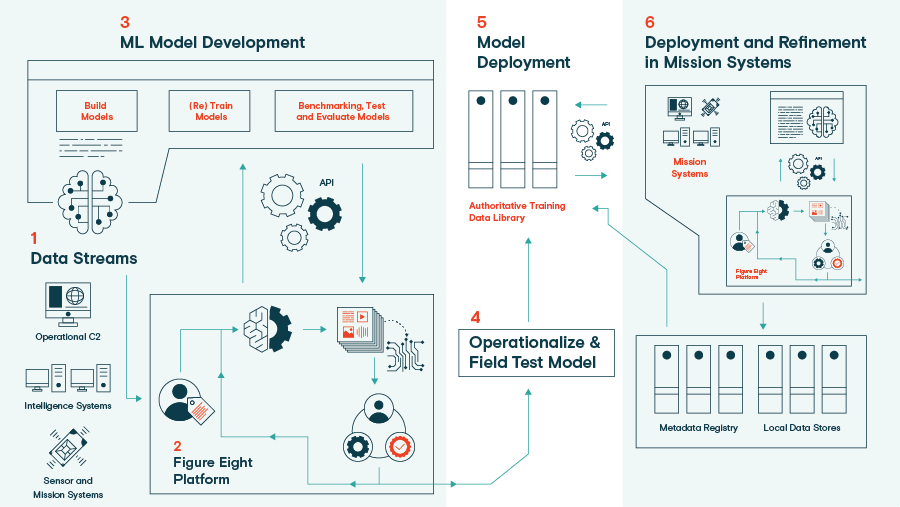

How to Build & Deploy ML Models Figure Eight Federal

From Competition to Production Deploying a Machine Learning Model on Azure trojrobert

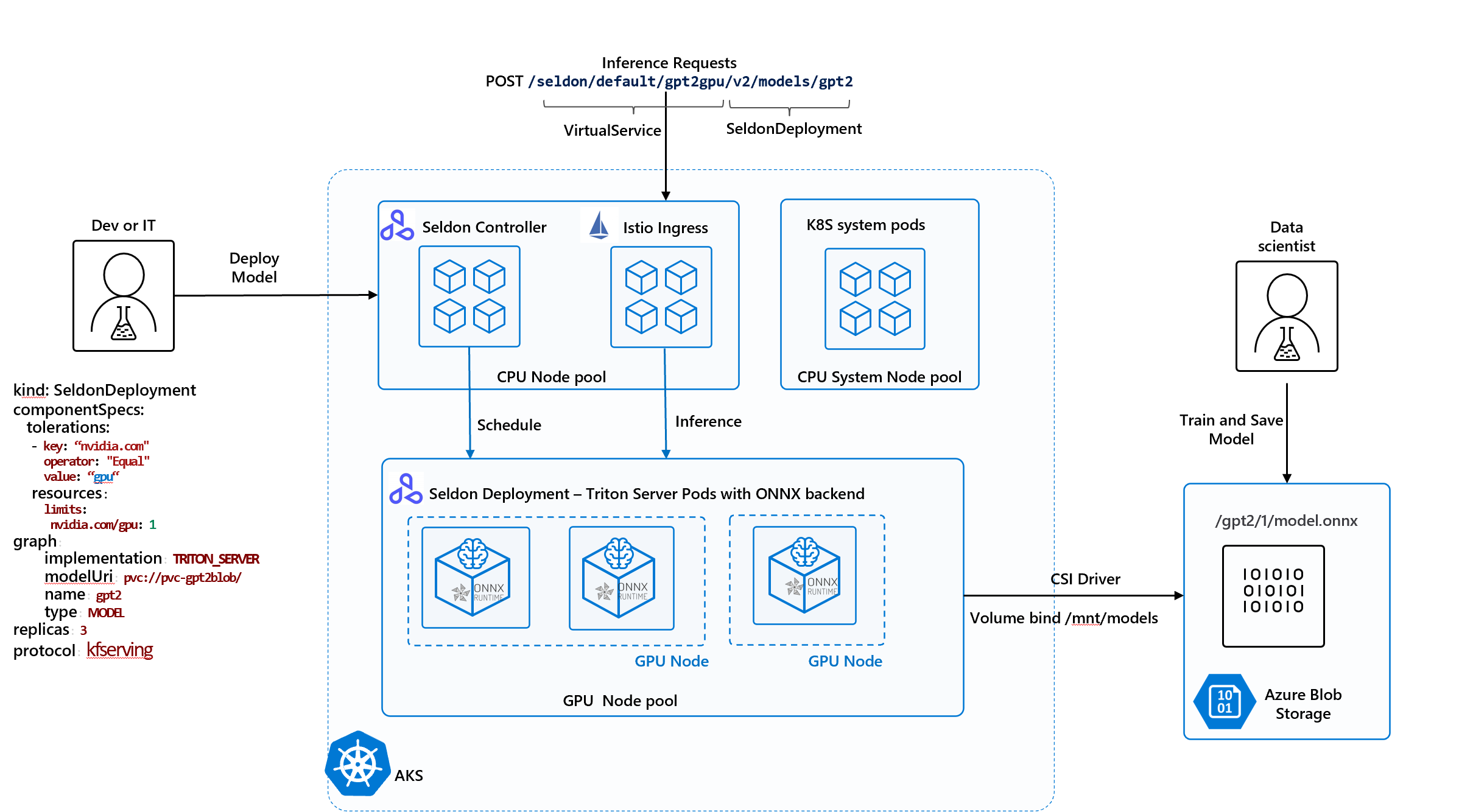

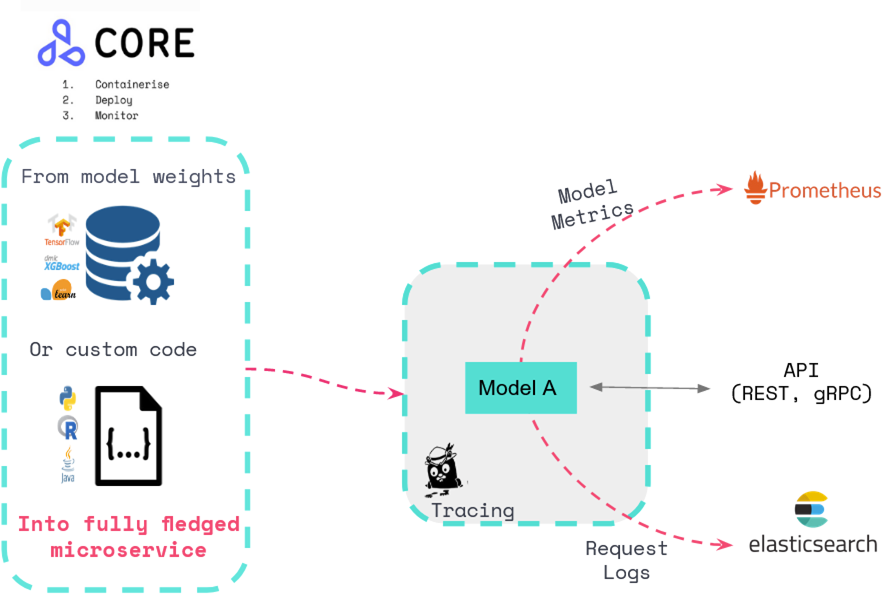

Simple steps to create scalable processes to deploy ML models as microservices Microsoft Open

How to Deploy a Machine Learning Model for Free 7 ML Model Deployment Cloud Platforms Code Zero

ML Model Deployment With Flask On Heroku How To Deploy Machine Learning Model With Flask

How to Easily Deploy ML Models with Flask and Docker

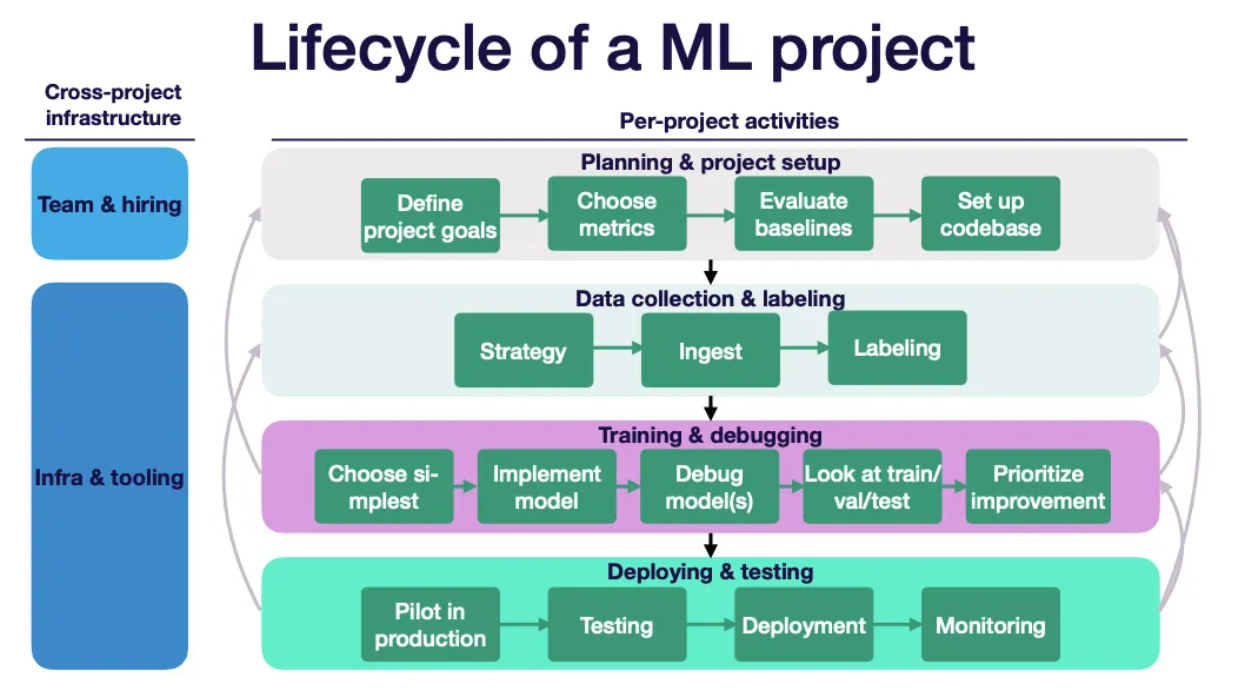

AI Project Run Managing the Life Cycle of an ML Model Le blog de Cellenza

How To Deploy A Machine Learning Model With Fastapi Docker And Github Actions By Ahmed Besbes

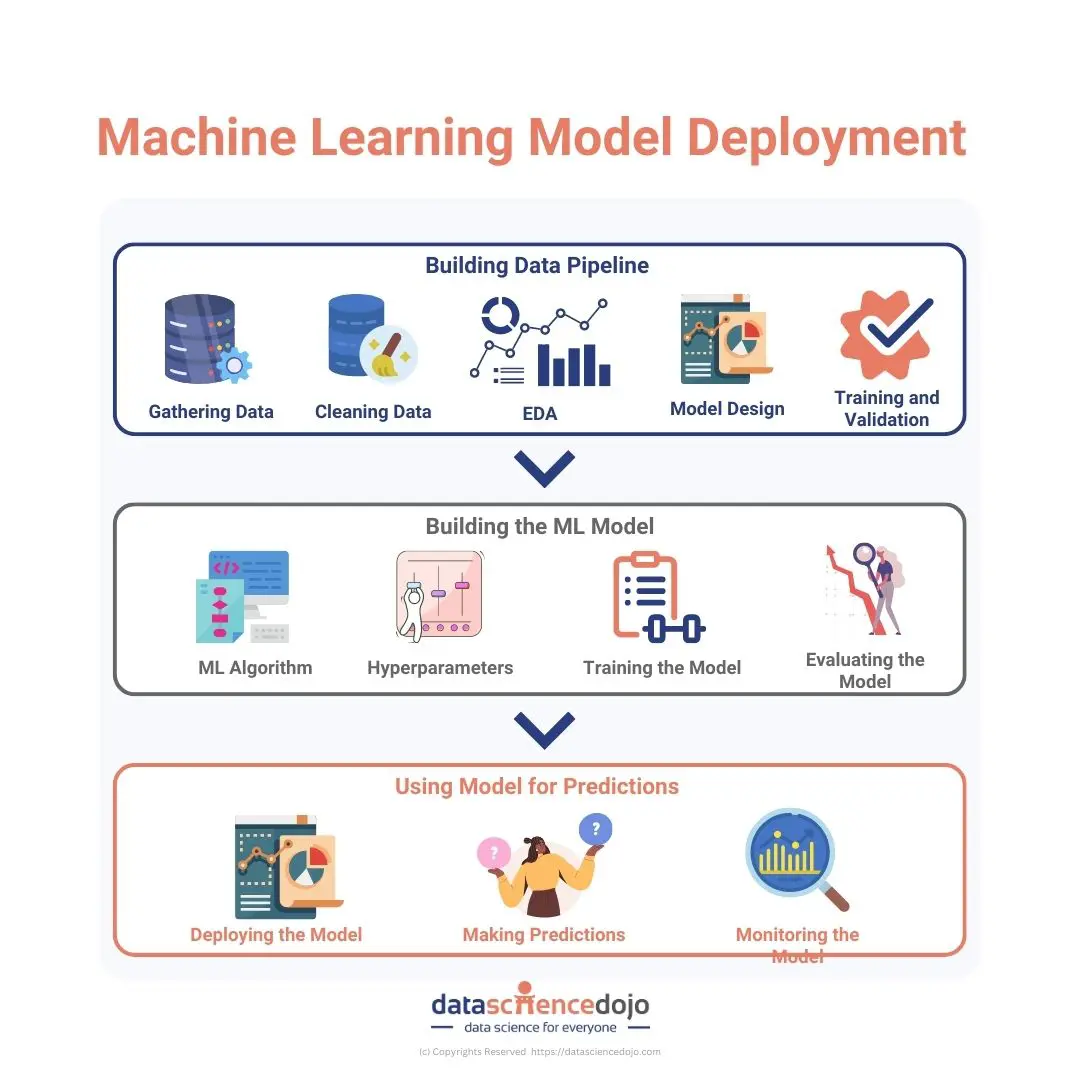

A guide to machine learning model deployment

Learn to deploy a model to an online endpoint using the Azure Machine Learning Python SDK v2. In this tutorial, you deploy and use a model that predicts the likelihood of a customer defaulting on a credit card payment. The steps you take are:

1. Register your model.
2. Create an endpoint and a first deployment.

Docker brings several benefits to deployment:

1. Docker makes this task easier, faster, and more reliable.
2. Using Docker, we can easily reproduce the working environment needed to train and run the model on different operating systems.
3. We can easily deploy the model and make it available to clients using technologies such as OpenShift, a Kubernetes distribution.
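At its core, the online endpoint described in the Azure tutorial is an HTTP service that accepts feature rows and returns model scores, and this is also the kind of process one would package into a Docker image. The sketch below uses only Python's standard library (it is not the Azure ML SDK) to show that request/response loop. The `/score` path, the `{"data": [...]}` payload shape, and the two-feature logistic model with placeholder coefficients are all hypothetical and are not taken from the tutorial.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical two-feature logistic model; the coefficients are placeholders,
# not the credit-default model from the Azure tutorial.
MODEL = {"weights": [0.8, -0.5], "bias": -1.0}


def score(features):
    """Return the model's probability for one row of features."""
    if len(features) != len(MODEL["weights"]):
        raise ValueError("expected %d features" % len(MODEL["weights"]))
    z = MODEL["bias"] + sum(w * x for w, x in zip(MODEL["weights"], features))
    return 1.0 / (1.0 + math.exp(-z))


class ScoringHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/score":  # hypothetical route name
            self.send_error(404, "unknown path")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            predictions = [score(row) for row in payload["data"]]
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, a missing "data" key, or badly shaped rows.
            self.send_error(400, str(exc))
            return
        body = json.dumps({"predictions": predictions}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def make_server(host="127.0.0.1", port=8080):
    """Build (but do not start) the server; port=0 picks a free port."""
    return ThreadingHTTPServer((host, port), ScoringHandler)
```

Calling `make_server().serve_forever()` would start it on port 8080; a POST of `{"data": [[1.0, 2.0]]}` to `/score` then returns a JSON list of probabilities. A managed platform, or a container orchestrator such as OpenShift, typically adds authentication, scaling, and monitoring around this same loop.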